Content Detection Market Size, Share, Industry Report, 2030

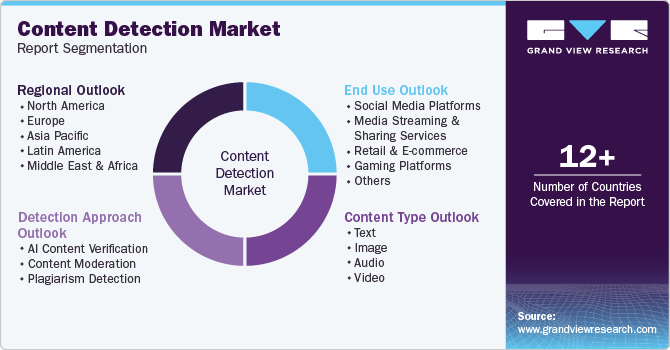

Content Detection Market (2025 - 2030) Size, Share & Trends Analysis Report By Detection Approach (AI Content Verification, Content Moderation), By Content Type (Text, Image), By End-use, By Region, And Segment Forecasts

- Report ID: GVR-4-68040-553-2

- Number of Report Pages: 200

- Format: PDF

- Historical Range: 2018 - 2023

- Forecast Period: 2025 - 2030

- Industry: Technology

Content Detection Market Summary

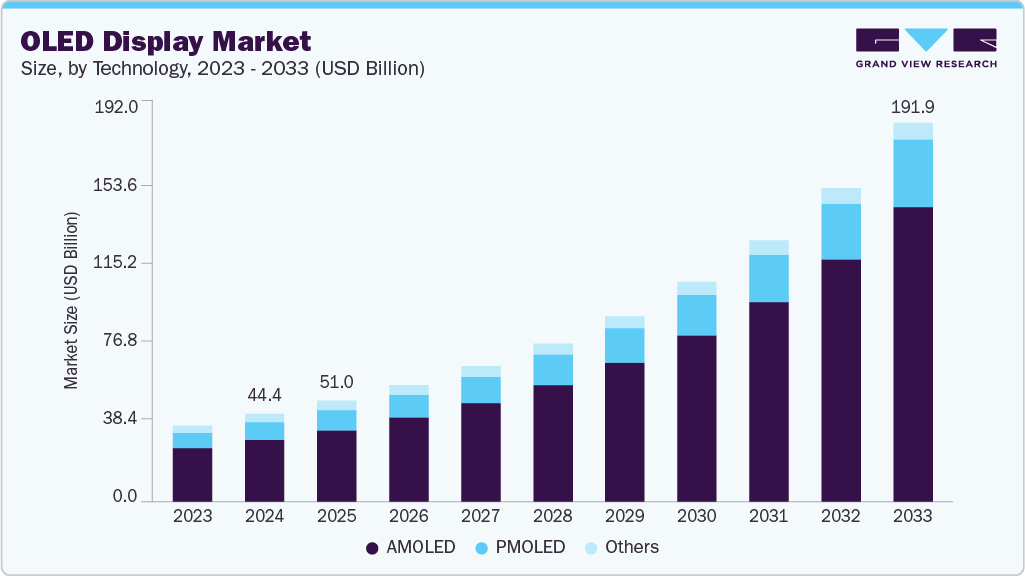

The global content detection market size was valued at USD 17.35 billion in 2024 and is projected to reach USD 38.90 billion by 2030, growing at a CAGR of 14.5% from 2025 to 2030. Many online platforms face challenges in managing harmful content due to limited resources.

Key Market Trends & Insights

- The North America content detection market dominated the global industry, accounting for a 38.7% share in 2024.

- The content detection market in Asia Pacific is anticipated to register the fastest CAGR over the forecast period.

- Based on detection approach, the content moderation segment dominated the industry with a 54.1% share in 2024.

- Based on content type, the text segment accounted for the largest market revenue share in 2024.

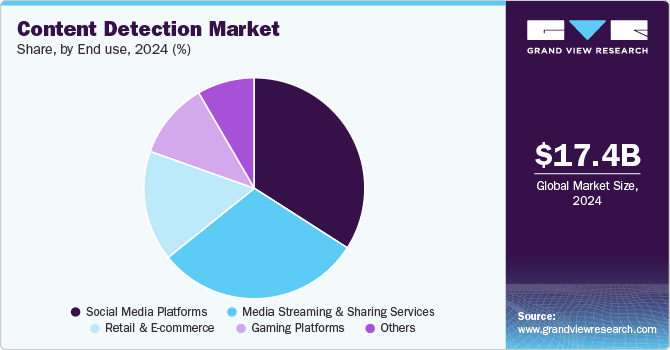

- Based on end use, the social media platforms segment generated the highest revenue in 2024.

Market Size & Forecast

- 2024 Market Size: USD 17.35 Billion

- 2030 Projected Market Size: USD 38.90 Billion

- CAGR (2025-2030): 14.5%

- North America: Largest market in 2024

- Asia Pacific: Fastest growing market
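
The headline figures above can be cross-checked with the standard compound-growth formula. The short Python sketch below is illustrative only: `cagr` and `project` are hypothetical helper names, not part of the report's methodology, and the 2025 value of USD 19.78 billion is taken from the report scope table.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in USD billion.
size_2024 = 17.35
size_2025 = 19.78
size_2030 = 38.90

# Growth rate implied by the 2025 and 2030 values (five compounding periods).
rate_2025_2030 = cagr(size_2025, size_2030, 5)

# Growth rate implied when measured from the 2024 base (six periods).
rate_2024_2030 = cagr(size_2024, size_2030, 6)

# Forward projection from the 2025 value at the stated 14.5% CAGR.
projected_2030 = project(size_2025, 0.145, 5)

print(f"Implied CAGR 2025-2030: {rate_2025_2030:.1%}")
print(f"Implied CAGR 2024-2030: {rate_2024_2030:.1%}")
print(f"Projected 2030 size at 14.5%: USD {projected_2030:.2f} billion")
```

Measured over 2025 to 2030, the implied rate rounds to the stated 14.5%. Measured from the 2024 base, it is slightly lower, at about 14.4%, which suggests the published CAGR is anchored to the 2025 estimate rather than the 2024 base year.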

The availability of shared detection tools is helping resource-constrained platforms identify and respond to threats more effectively. This reduces the reliance on building expensive, in-house systems. It also allows these platforms to align more easily with growing safety regulations. As more platforms adopt these tools, content detection is expanding across the digital space. Companies are increasingly integrating these solutions to strengthen trust and safety on their platforms. For instance, in July 2024, Jigsaw, Google’s in-house tech incubator, in collaboration with Tech Against Terrorism and the Global Internet Forum to Counter Terrorism (GIFCT), open-sourced a tool called Altitude to help smaller companies detect and manage extremist content by consolidating flagged material from trusted databases. Altitude enables human moderators to efficiently identify terrorist and violent extremist content without automated removal, supporting compliance with regulations such as the Digital Services Act.

Content detection is moving toward smarter, AI-driven systems. These tools can recognize patterns and spot unusual activity quickly. They work in real time, allowing faster responses to potential threats. This reduces the need for constant manual checks by human teams. As detection becomes more automated, platforms can manage larger volumes of data without sacrificing accuracy. This change is helping organizations improve security while saving time and resources. Companies are already adopting such systems to strengthen their digital safety and response capabilities. For instance, in September 2024, TATA Consultancy Services Limited expanded its partnership with Google Cloud to launch AI-powered cybersecurity solutions, including Managed Detection and Response and Secure Cloud Foundation, to enhance threat detection and cloud security across diverse environments. These solutions use AI, machine learning, and automation to enable real-time monitoring, improve response capabilities, and help enterprises build cyber-resilient operations.

Detection systems are becoming more comprehensive in scope as they now analyze text, audio, images, and video together. This shift toward unified, multimodal models is improving accuracy across different content types. By integrating multiple formats, these systems can detect inconsistencies and manipulations more effectively. This approach is especially useful in identifying synthetic and AI-generated media, which often involve subtle alterations across various formats. It also strengthens cross-platform content verification, making detection efforts more reliable. The integration of multimodal analysis enhances the robustness of content detection workflows. As manipulation techniques grow more complex, relying on a single type of data is no longer sufficient. Multimodal models offer layered insights that increase the chances of flagging misleading or harmful content before it spreads widely.

Detection Approach Insights

In terms of detection approach, the content moderation segment dominated the industry with a 54.1% share in 2024. Content moderation focuses on identifying harmful, misleading, or inappropriate content across various formats, such as text, images, videos, and live streams. These systems often combine AI-driven automation with human review to ensure accuracy and context-aware decisions. Content moderation is essential for platforms to comply with regulations and maintain user trust. Its broad application across social media, streaming services, and online communities has made it a central component of detection strategies. As a result, it continues to lead in both adoption and investment within the content detection space.

AI content verification is experiencing significant growth in the content detection market due to rising concerns around misinformation, deepfakes, and synthetic media. Organizations are increasingly adopting AI tools to verify the authenticity of digital content at scale. These tools can detect subtle patterns, inconsistencies, or traces of manipulation that are difficult for humans to spot. The growing volume of user-generated content and the speed at which it spreads online have amplified the need for automated verification solutions. Regulatory pressure and platform accountability are also pushing companies to strengthen their verification systems. As a result, AI-driven verification is emerging as a key area of investment and innovation within the broader content detection ecosystem.

Content Type Insights

The text segment accounted for the largest market revenue share in 2024. This was driven by the widespread use of written content across social media, news platforms, and online forums. Increased concerns over misinformation, hate speech, and spam boosted demand for text-based detection tools. Natural Language Processing (NLP) advancements also enhanced the accuracy and efficiency of detection systems. As a result, organizations prioritized text analysis to manage and moderate large volumes of content effectively. The scalability of AI-based text detection makes it suitable for real-time monitoring and automated flagging. In addition, regulatory requirements around digital communication and platform accountability have fueled further investment in text-focused solutions.

The video segment is expected to see significant growth over the forecast period due to the surge in video content across social platforms and streaming services. Deepfake technology and synthetic media have raised concerns over video authenticity. Detection tools are evolving to identify manipulations such as splicing, face swaps, and AI-generated footage. Demand for real-time video moderation is increasing, especially for live-streamed content. Organizations are increasingly adopting advanced video analysis tools to ensure the integrity and authenticity of video content.

End Use Insights

Social media platforms generated the highest revenue in 2024 due to the vast amount of user-generated content shared daily. These platforms face constant challenges related to misinformation, harassment, hate speech, and harmful or inappropriate content. As a result, they heavily invest in detection and moderation technologies to maintain a safe and trustworthy environment. Real-time monitoring and AI-driven automated tools have become essential to manage the high volume and velocity of content. In addition, regulatory pressures and evolving compliance standards compel platforms to strengthen their detection systems. The need to protect users, maintain platform integrity, and meet legal obligations continues to drive significant investment and innovation in this segment.

The media streaming and sharing services segment is expected to witness strong growth during the forecast period due to the increasing volume of video and audio content being uploaded and consumed globally. These platforms require advanced content detection tools to manage copyright issues, deepfakes, and inappropriate material. As user engagement grows, the demand for real-time moderation and automated verification systems is rising. AI-powered solutions help identify manipulated or misleading content quickly and accurately. Regulatory frameworks are also pressuring these services to improve content governance. This combination of user demand, content risk, and compliance needs is driving the segment’s rapid expansion.

Regional Insights

The North America content detection market dominated the global industry, accounting for a 38.7% share in 2024. The North American industry is driven by advanced digital ecosystems and a high concentration of tech companies. The region benefits from strong technological infrastructure and high digital content consumption across sectors such as media, education, and finance. Enterprises are increasingly deploying AI-powered tools to manage misinformation, offensive content, and security threats. Collaboration between private companies and public institutions is also encouraging innovation in detection capabilities. Content moderation is becoming a standard operational requirement for platforms across industries.

U.S. Content Detection Market Trends

The content detection market in the U.S. is supported by a highly competitive tech landscape and active R&D investment. Tech giants are continuously advancing detection tools for social media, e-commerce, and streaming services to manage content authenticity and platform safety. Concerns over misinformation, political manipulation, and synthetic media are driving both public and private sector demand. Regulatory scrutiny is increasing, prompting platforms to integrate more transparent and automated content management systems.

Europe Content Detection Market Trends

The content detection market in Europe shows steady growth, underpinned by robust legal frameworks such as the Digital Services Act and the General Data Protection Regulation (GDPR). The region places a strong emphasis on content accountability, privacy, and ethical AI use. Platforms operating in Europe are under pressure to monitor and remove illegal or harmful content quickly and transparently. Public concern over user safety, digital misinformation, and media manipulation has led to greater reliance on AI-powered and multilingual detection tools.

Asia Pacific Content Detection Market Trends

The content detection market in Asia Pacific is anticipated to register the fastest CAGR over the forecast period. Asia Pacific is witnessing fast-paced growth in content detection adoption, driven by rising internet penetration, mobile-first usage patterns, and the explosion of digital content. Countries such as India, China, Japan, and South Korea are key contributors to market expansion, supported by strong government interest in controlling misinformation and managing digital ecosystems. The region faces unique challenges due to linguistic diversity and the volume of content generated daily. Local platforms are increasingly investing in scalable, AI-enabled detection systems capable of real-time analysis and moderation.

Key Content Detection Company Insights

Some of the key companies in the content detection industry include Amazon Web Services, Inc., Clarifai, Inc., Cogito Tech, and Google LLC. Organizations are focusing on expanding their customer base to gain a competitive edge in the industry. To that end, key players are pursuing several strategic initiatives, such as mergers and acquisitions and partnerships with other major companies.

- Clarifai focuses on multimodal content detection using computer vision, natural language processing, and audio analysis. The company provides AI-powered tools that detect explicit, violent, or harmful content in images, videos, and text. Its platform supports customizable workflows, allowing users to train and deploy models specific to their moderation needs. Clarifai’s solutions are used across media, government, and e-commerce sectors. Its recent developments include improved zero-shot learning and model efficiency for real-time moderation.

- Cogito Tech emphasizes human-in-the-loop data annotation to support AI content detection systems. It provides high-quality labeled datasets used to train models for identifying offensive language, hate speech, explicit images, and more. The company works with enterprises to build and refine content detection algorithms by delivering accurate text, image, and video annotations. Cogito also offers multilingual moderation capabilities, which are important for platforms with global audiences. Its recent efforts focus on enhancing annotation accuracy and incorporating context-aware labeling techniques.

Key Content Detection Companies:

The following are the leading companies in the content detection market. These companies collectively hold the largest market share and dictate industry trends.

- ActiveFence

- Amazon Web Services, Inc.

- Clarifai, Inc.

- Cogito Tech

- Google LLC

- Hive

- Meta

- Microsoft

- Sensity

- Wipro

Recent Developments

- In November 2024, Clarifai joined the Berkeley AI Research (BAIR) Open Research Commons to collaborate on advancing large-scale AI models and multimodal learning. This collaboration supports Clarifai’s efforts in applied AI, particularly in content moderation, computer vision, and ethical AI development.

- In September 2024, Microsoft launched a feature called Correction within its Azure AI Content Safety API to tackle AI hallucinations by automatically identifying and rectifying false or misleading text generated by large language models. The feature utilizes both small and large language models to match responses with grounding documents, helping enhance the reliability and precision of generative AI, particularly in critical domains such as medicine.

- In August 2024, Meta and Universal Music Group, a music corporation in the Netherlands, expanded their licensing agreement to include WhatsApp, marking a broader effort to support artists and songwriters across Meta’s platforms. As part of the deal, both companies committed to combating unauthorized AI-generated content to ensure fair compensation and protect human creativity.

Content Detection Market Report Scope

| Report Attribute | Details |
|---|---|
| Market size value in 2025 | USD 19.78 billion |
| Revenue forecast in 2030 | USD 38.90 billion |
| Growth rate | CAGR of 14.5% from 2025 to 2030 |
| Base year for estimation | 2024 |
| Historical data | 2018 - 2023 |
| Forecast period | 2025 - 2030 |
| Quantitative units | Revenue in USD million/billion and CAGR from 2025 to 2030 |
| Report coverage | Revenue forecast, company ranking, competitive landscape, growth factors, and trends |
| Segment scope | Detection approach, content type, end use, region |
| Region scope | North America; Europe; Asia Pacific; Latin America; Middle East & Africa |
| Country scope | U.S.; Canada; Mexico; Germany; U.K.; France; China; Japan; India; Australia; South Korea; Brazil; KSA; UAE; South Africa |
| Key companies profiled | ActiveFence; Amazon Web Services, Inc.; Clarifai, Inc.; Cogito Tech; Google LLC; Hive; IBM Corporation; Microsoft; Meta; Sensity; Wipro |
| Customization scope | Free report customization (equivalent to up to 8 analysts’ working days) with purchase. Addition or alteration to country, regional & segment scope |
| Pricing and purchase options | Avail customized purchase options to meet your exact research needs. |

Global Content Detection Market Report Segmentation

This report forecasts revenue growth at global, regional, and country levels and provides an analysis of the latest industry trends and opportunities in each of the sub-segments from 2018 to 2030. For this study, Grand View Research has segmented the global content detection market report based on detection approach, content type, end use, and region:

- Detection Approach Outlook (Revenue, USD Million, 2018 - 2030)
  - AI Content Verification
  - Content Moderation
  - Plagiarism Detection

- Content Type Outlook (Revenue, USD Million, 2018 - 2030)
  - Text
  - Image
  - Audio
  - Video

- End Use Outlook (Revenue, USD Million, 2018 - 2030)
  - Social Media Platforms
  - Media Streaming & Sharing Services
  - Retail & E-commerce
  - Gaming Platforms
  - Others

- Regional Outlook (Revenue, USD Million, 2018 - 2030)
  - North America
    - U.S.
    - Canada
    - Mexico
  - Europe
    - U.K.
    - Germany
    - France
  - Asia Pacific
    - China
    - Japan
    - India
    - Australia
    - South Korea
  - Latin America
    - Brazil
  - Middle East & Africa (MEA)
    - KSA
    - UAE
    - South Africa

Frequently Asked Questions About This Report

- The global content detection market size was estimated at USD 17.35 billion in 2024 and is expected to reach USD 19.78 billion in 2025.

- The global content detection market is expected to grow at a compound annual growth rate of 14.5% from 2025 to 2030 to reach USD 38.90 billion by 2030.

- North America dominated the content detection market with a share of 38.7% in 2024, driven by the region's advanced technological infrastructure, high adoption rates of digital media, and strong demand for content security and compliance solutions across various industries.

- Some key players operating in the content detection market include ActiveFence, Amazon Web Services, Inc., Clarifai, Inc., Cogito Tech, Google LLC, Hive, IBM Corporation, Microsoft, Meta, Sensity, and Wipro.

- Key factors that are driving the market growth include rising demand for high-quality content, AI advancements, increased digital media adoption, enhanced content security, and the growth of streaming services and connected devices.
